AI for insect detection & classification

A simple, visual walkthrough of how AI can spot and identify insects using motion detection and YOLO.

Written after conducting the AI for Insect Detection & Classification Workshop.

This blog post is designed for readers with little to no coding experience. The goal is to explain how simple motion detection and modern object detection models like YOLO can work together to spot and identify insects.

I hope you find this exploration both accessible and interesting!

Introduction

Step into a field at dawn, and you’ll hear a quiet symphony of tiny wings.

Some belong to helpful pollinators 🐝, while others are hungry pests 🐛.

Catching these insects early can protect crops, reduce chemical sprays, and help us understand how seasons and climate shape insect life. But how do you do that without draining batteries or overwhelming tiny edge devices?

We use a two-step camera system that’s smart and simple:

- Notice insect motion — “Something moved.”

- Detect pests with YOLO — “Which insect is it?”

Let’s dive into how motion spotting and fast object detection help keep a watchful eye on the fields — one tiny wingbeat at a time.

Motion detection for insects

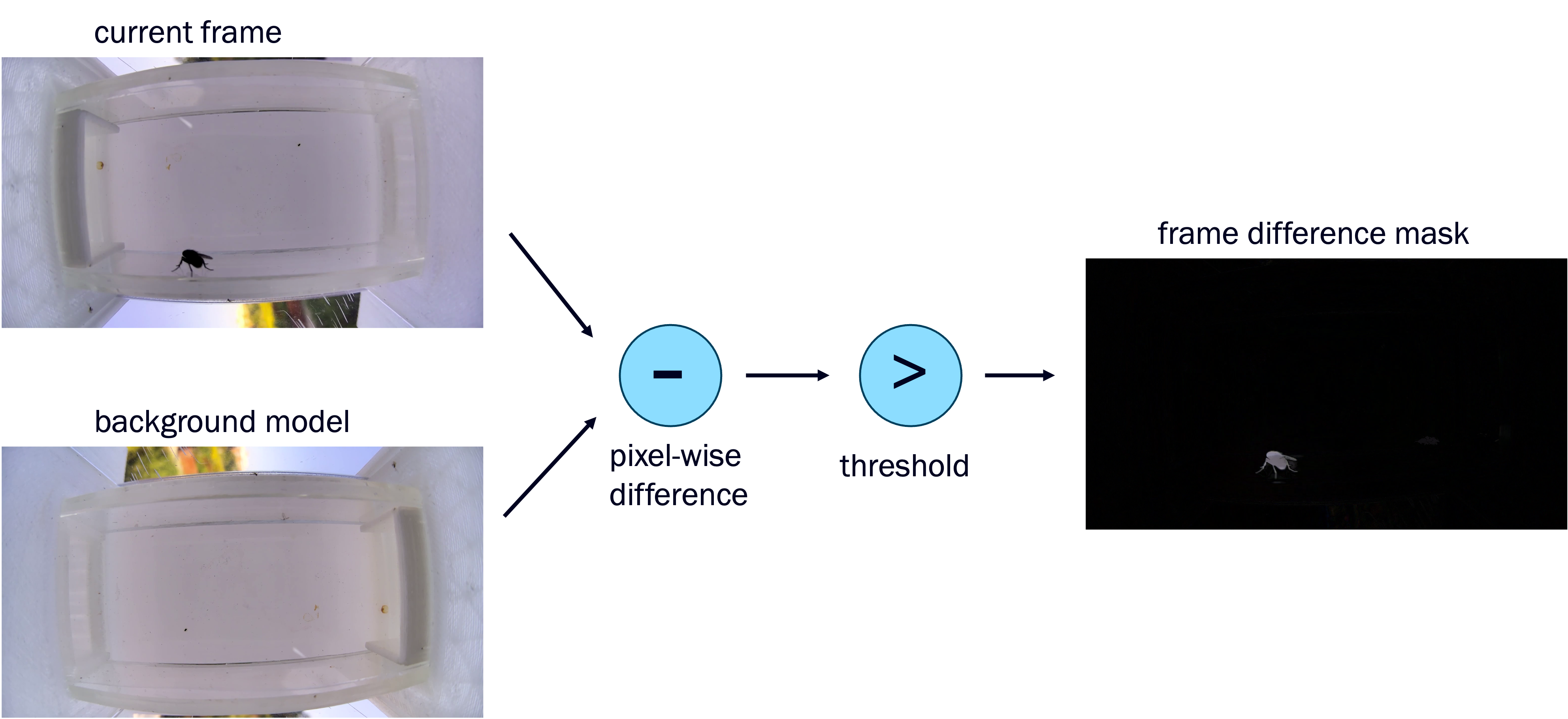

When a camera sits still, most of the scene stays the same. If a handful of pixels suddenly change, that signals motion. Here’s a simple background subtraction method to pick out moving insects:

- Build a background model

Watch the scene for a few seconds with no insects, then average those frames to create a ‘clean’ background image. Alternatively, use a single snapshot without any bugs.

Fig. 2: Background model for our video sequence.

- Frame difference

- Subtract the background model from the current frame and take the absolute value of each pixel difference to get a difference image.

- Large pixel differences usually mean motion.

- Threshold the difference image into a black-and-white motion mask (white = moving pixels; black = background).
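For readers curious about what these steps look like in code, here is a minimal sketch in Python. It assumes tiny grayscale frames stored as nested lists, with brightness values from 0 to 255. The function names (`build_background`, `motion_mask`, `has_motion`), the threshold of 30, and the two-pixel minimum are hypothetical, illustrative choices. Real systems usually rely on an image library such as OpenCV, which offers `cv2.absdiff` and `cv2.threshold` for the differencing and thresholding steps.

```python
# Minimal background-subtraction sketch (illustrative, not production code).
# Frames are tiny grayscale images stored as nested lists: frame[row][col]
# holds a brightness value from 0 (black) to 255 (white).


def build_background(frames):
    """Average several insect-free frames, pixel by pixel."""
    height, width = len(frames[0]), len(frames[0][0])
    count = len(frames)
    return [
        [sum(frame[r][c] for frame in frames) / count for c in range(width)]
        for r in range(height)
    ]


def motion_mask(frame, background, threshold=30):
    """Mark pixels whose absolute difference from the background exceeds threshold.

    1 means "moving" (white in the mask), 0 means "background" (black).
    The threshold of 30 is an illustrative guess; tune it for your camera.
    """
    return [
        [1 if abs(pixel - bg) > threshold else 0 for pixel, bg in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def has_motion(mask, min_pixels=2):
    """Treat the frame as a candidate if at least min_pixels pixels moved."""
    return sum(sum(row) for row in mask) >= min_pixels


# Usage: a uniform gray scene, then the same scene with a small dark insect.
empty = [[100, 100, 100], [100, 100, 100], [100, 100, 100]]
background = build_background([empty, empty])
with_insect = [[100, 100, 100], [100, 20, 20], [100, 100, 100]]
mask = motion_mask(with_insect, background)
print(mask)              # [[0, 0, 0], [0, 1, 1], [0, 0, 0]]
print(has_motion(mask))  # True
```

The absolute difference matters because an insect can be darker or brighter than the background. Without it, a dark insect would produce negative differences that a simple "greater than" threshold would miss.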

Motion detection is all around us. Here are a few real-world examples:

- Security cameras & intrusion alarms

- Sports highlights

- Wildlife monitoring (trail cams)

- Traffic flow analysis

Insect detection with YOLO

Motion detection points out where to look, and YOLO tells us what is moving. YOLO (You Only Look Once) suits this second step because it is:

- Real-time: Runs at video speed, even on small devices.

- All-in-one: Finds both location and the insect’s identity in a single pass.

- Open-source: Free models (YOLOv8) and easy training tools are available.

We now describe the original version of YOLO. Although many improvements have been made across subsequent versions, the core ideas outlined below remain the same.

The quick YOLO tour

- Candidate frames

After motion detection, we extract snapshots that might contain insects. Fig. 4: Input image for insect detection, obtained from motion detection.

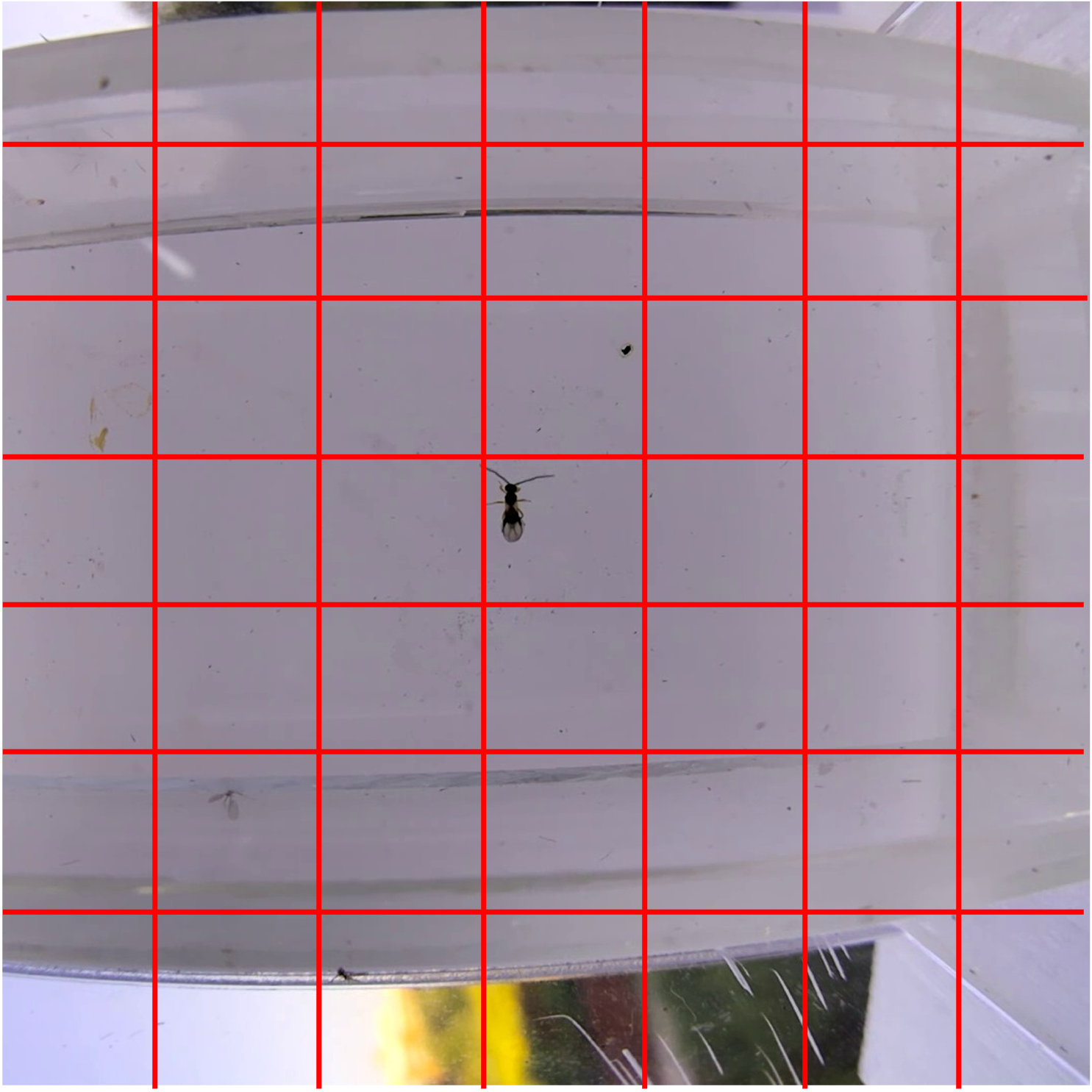

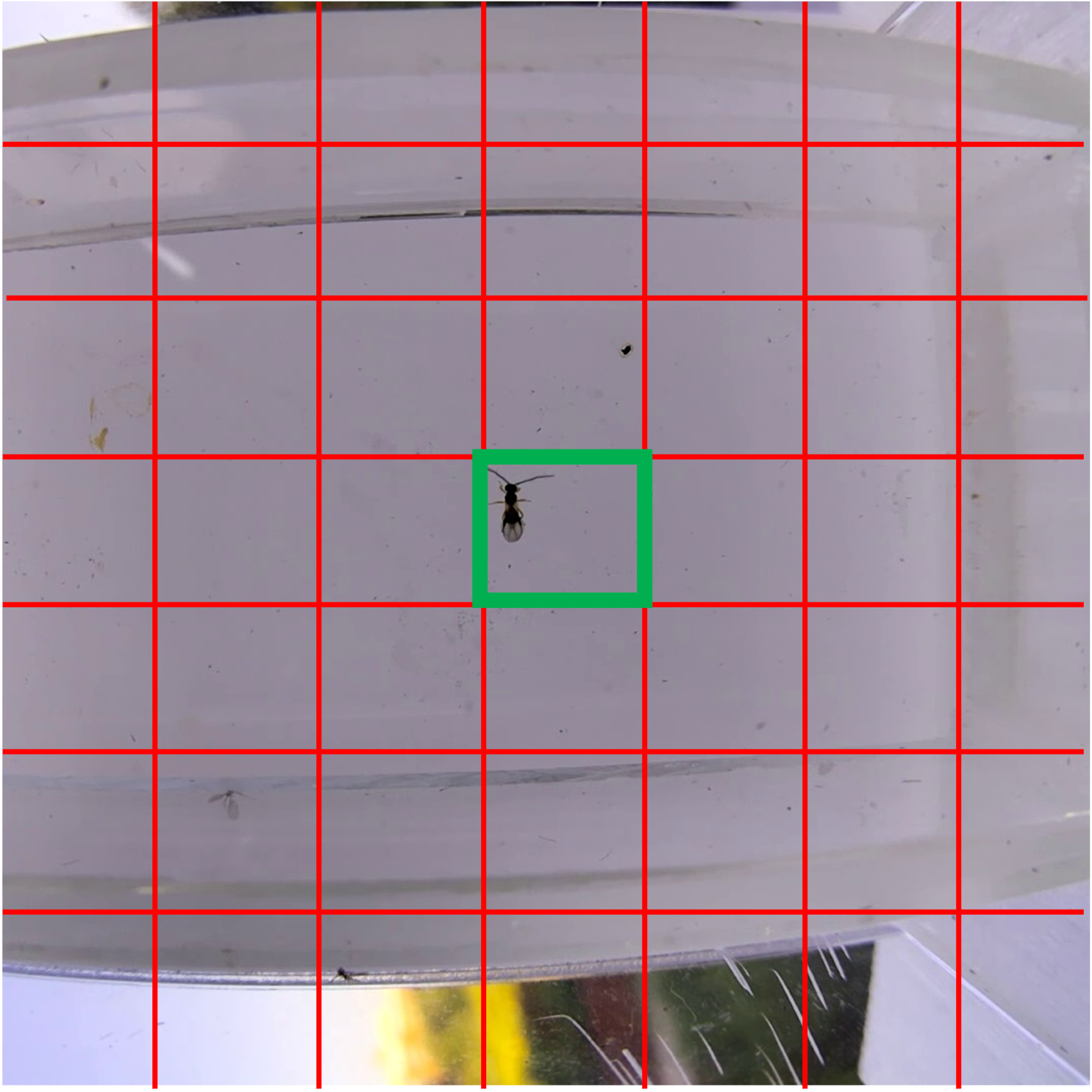

Fig. 4: Input image for insect detection, obtained from motion detection. - Resize & grid

Resize each snapshot to 448×448 pixels and overlay a 7×7 grid. Fig. 5: A 7×7 grid overlaid on the cropped image.

Fig. 5: A 7×7 grid overlaid on the cropped image. - One glance, many predictions

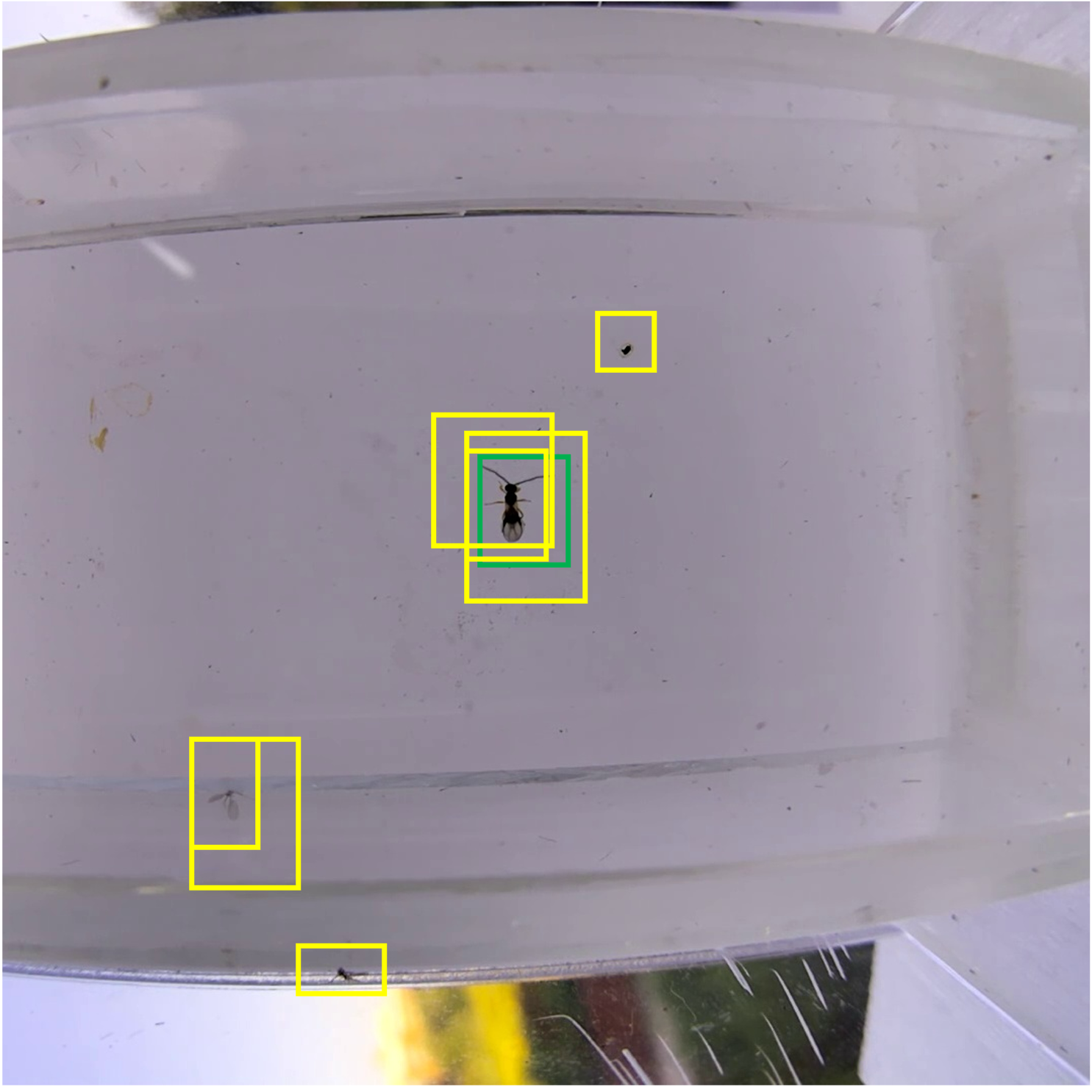

For each grid cell, YOLO’s neural network predicts a few bounding boxes (two per cell in the original paper), a confidence score for each box, and one set of class probabilities for the different insects.

Fig. 6: The green box has a high confidence score and class probability; the red boxes are low confidence.

- Keep the best

Non-Maximum Suppression (NMS) keeps the highest-scoring box and removes overlapping, lower-scoring ones.

Fig. 7: NMS removes duplicate boxes, leaving one clear box per object.

After YOLO predicts many boxes, NMS:

- Keeps the box with the highest confidence score.

- Removes boxes that overlap too much (measured by Intersection over Union, or IoU).

- Repeats with the highest-scoring box that is left, until every box has been either kept or removed.

This leaves one clean box per insect. In our diagram, the green box remains and the yellow ones are discarded.

- Final output

We get the insect’s class, a confidence score, and the bounding box coordinates.
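To make the "Keep the best" step concrete, here is a minimal sketch of IoU and greedy NMS in Python. It represents each box by its corners `(x1, y1, x2, y2)`, which is an assumption made here because overlap is easiest to compute from corners. The 0.5 IoU threshold is an illustrative default, and the function names are hypothetical rather than taken from any YOLO library.

```python
# Illustrative IoU + greedy Non-Maximum Suppression sketch.
# Boxes are (x1, y1, x2, y2): top-left and bottom-right corners in pixels.


def iou(box_a, box_b):
    """Intersection over Union: overlap area divided by combined area."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # 0 if the boxes don't overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return the indices of the boxes to keep, highest score first."""
    # Visit boxes from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)  # the most confident box still in play
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Usage: two overlapping guesses for one insect, plus a second insect.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.6, 0.8]
print(nms(boxes, scores))  # [0, 2]: the 0.6 duplicate is suppressed
```

The two boxes around the first insect share an IoU of about 0.82, so the weaker one is removed. The distant box survives because it does not overlap at all. Many implementations run this step separately for each insect class, so a box for one species never suppresses a box for another.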

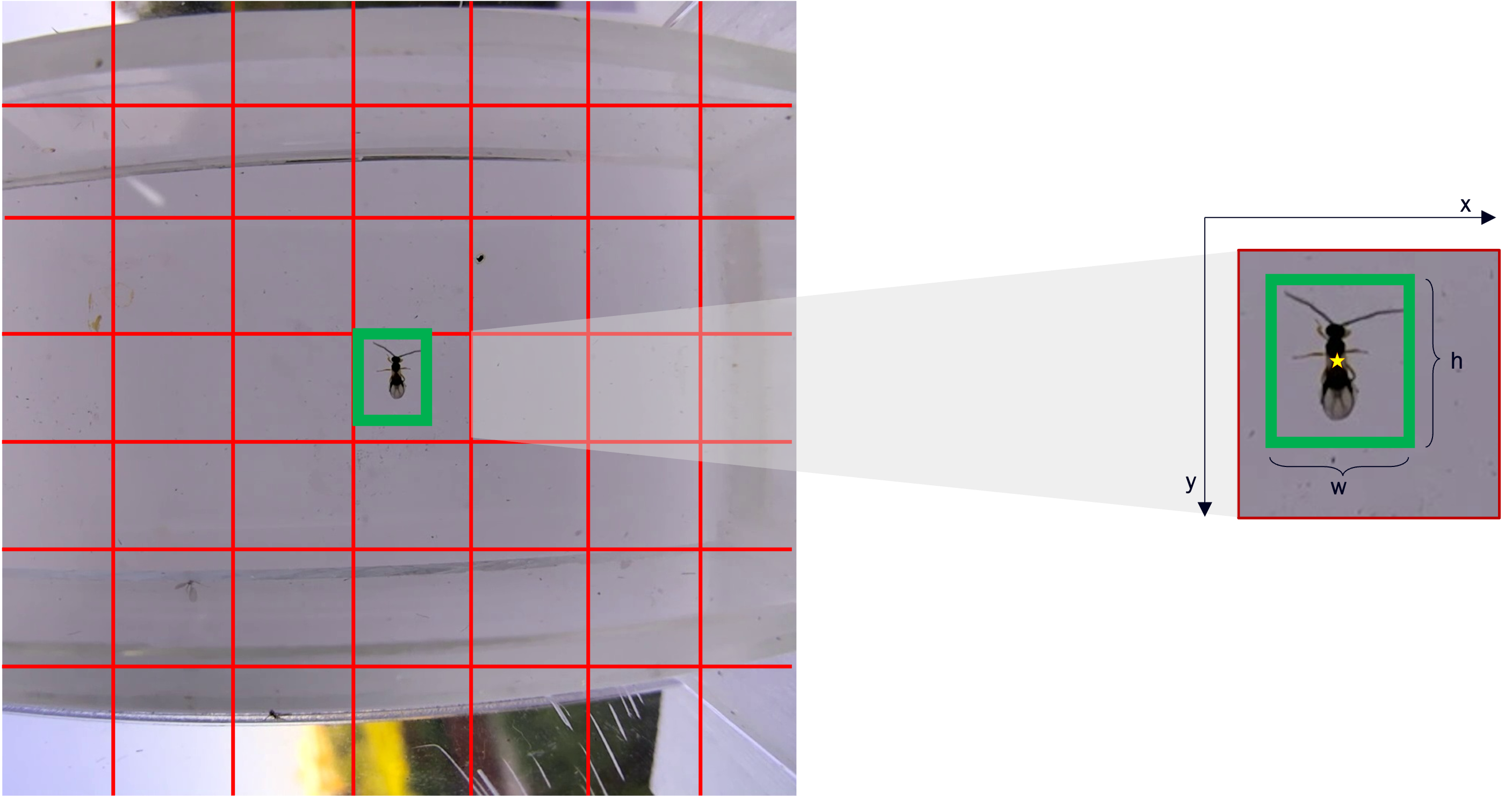

You might be wondering:

- How are the bounding boxes represented?

Fig. 8: Bounding box representation.

- Each box is defined by (cx, cy, w, h).

- (cx, cy) is the box center, measured relative to the bounds of its grid cell (so each value lies between 0 and 1).

- (w, h) is the width and height, relative to the full image.

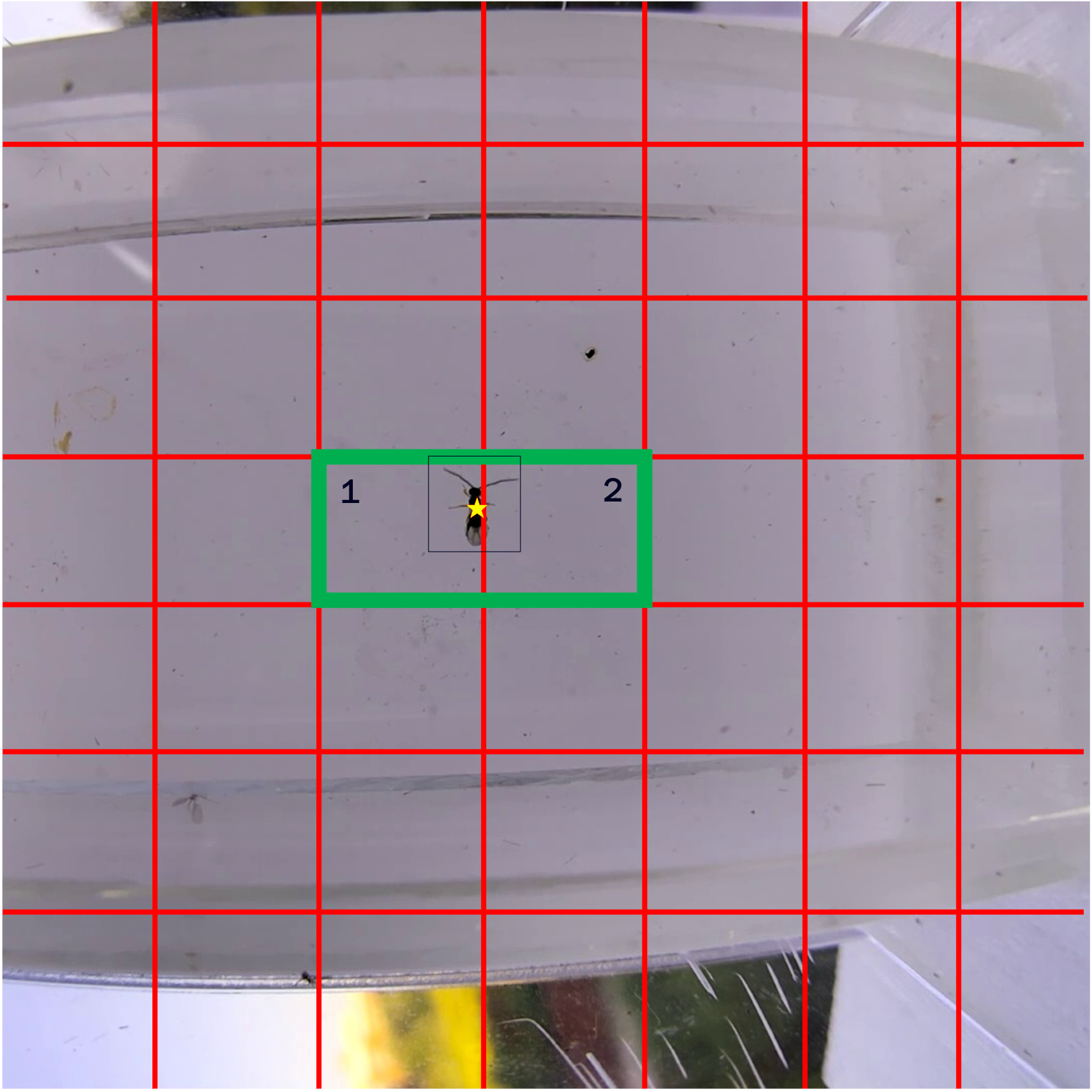

- What if the insect overlaps two grid cells?

Fig. 9: Handling overlapping grid cells.

- Only the cell (marked as 1) containing the insect’s center (marked by a star) makes the prediction.

- Neighboring cells ignore this insect and focus on objects whose centers lie inside them.
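Both answers can be sketched in a few lines of Python. This is an illustration under stated assumptions: a 448×448 image, a 7×7 grid, and the helper names `encode_box`, `decode_box`, and `output_size`, which are hypothetical. The code finds the responsible cell from the insect's center, then expresses (cx, cy) relative to that cell and (w, h) relative to the whole image. The defaults of two boxes per cell and 20 classes follow the original YOLO paper's PASCAL VOC setup. An insect model would use its own number of classes.

```python
# Illustrative sketch of the original-YOLO box encoding (448x448 image, 7x7 grid).
# Function names are hypothetical; defaults follow the original YOLO paper.


def encode_box(cx_px, cy_px, w_px, h_px, image_size=448, grid_size=7):
    """Turn a pixel box (center x, center y, width, height) into YOLO targets.

    Returns (row, col, cx, cy, w, h):
      (row, col): the grid cell containing the box center (the responsible cell)
      (cx, cy):   the center's position inside that cell, from 0 to 1
      (w, h):     the box size as a fraction of the whole image
    """
    cell_size = image_size / grid_size  # 448 / 7 = 64 pixels per cell
    # min() keeps a center lying exactly on the right/bottom edge in the last cell.
    col = min(int(cx_px // cell_size), grid_size - 1)
    row = min(int(cy_px // cell_size), grid_size - 1)
    cx = cx_px / cell_size - col
    cy = cy_px / cell_size - row
    return row, col, cx, cy, w_px / image_size, h_px / image_size


def decode_box(row, col, cx, cy, w, h, image_size=448, grid_size=7):
    """Invert encode_box: recover the pixel center, width, and height."""
    cell_size = image_size / grid_size
    return (col + cx) * cell_size, (row + cy) * cell_size, w * image_size, h * image_size


def output_size(grid_size=7, boxes_per_cell=2, num_classes=20):
    """Numbers predicted per image: each box has 4 coordinates + 1 confidence,
    and each cell adds one set of class probabilities."""
    return grid_size * grid_size * (boxes_per_cell * 5 + num_classes)


# Usage: an insect centered at pixel (200, 100), 64 px wide and 32 px tall.
row, col, cx, cy, w, h = encode_box(200, 100, 64, 32)
print(row, col, cx, cy)  # 1 3 0.125 0.5625
print(output_size())     # 1470, i.e. a 7x7x30 output for 20 classes
```

Because only the cell in row 1, column 3 contains the center, that cell alone is responsible for this insect. This holds even if the box spills into neighboring cells.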

Conclusion & next steps

Combining motion detection with YOLO creates a nimble tag-team. On a 90,000-frame video, motion detection trimmed the candidates down to just 1,000 frames, so YOLO only had to examine about 1 frame in 90 (roughly 1.1%). This shows why pairing motion and object detection is essential for bio-monitoring systems that run on tight compute and energy budgets.
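The tag-team idea can be sketched as a tiny Python loop in which the cheap motion check decides whether the expensive detector runs at all. This is a minimal sketch under assumptions. `run_pipeline` is a hypothetical name. `is_candidate` stands in for a motion check like the background subtraction described earlier. `detect` is a placeholder for a YOLO model, not a real library call. The toy frames are just numbers, chosen so that the ratio mirrors the 90,000-to-1,000 reduction above.

```python
# Illustrative two-stage pipeline: a cheap motion check gates an expensive detector.
# `is_candidate` and `detect` are placeholders you supply; `detect` stands in
# for a YOLO model and is NOT a real library call.


def run_pipeline(frames, is_candidate, detect):
    """Run detect() only on frames where is_candidate() reports motion.

    Returns a dict mapping frame index -> detections for that frame.
    """
    results = {}
    for index, frame in enumerate(frames):
        if is_candidate(frame):             # step 1: "Something moved."
            results[index] = detect(frame)  # step 2: "Which insect is it?"
    return results


# Toy usage: pretend motion appears in every 90th of 90,000 frames,
# mirroring the 90,000 -> 1,000 ratio above (the frames here are just numbers).
frames = range(90_000)
results = run_pipeline(frames, lambda f: f % 90 == 0, lambda f: ["insect"])
print(len(results))                # 1000
print(len(results) / len(frames))  # ~0.0111, about 1.1% of frames
```

The design choice is simply to let the cheapest test run on every frame and reserve the costly model for the few frames that pass it. That is where the compute and energy savings come from.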

Next on our roadmap:

- See even smaller bugs — add a zoom lens or train YOLO with clearer close-ups.

- Dashboards for farmers — stream live counts to a phone app for same-day action.

Have ideas, questions, or cool bug clips? Drop a comment below — we’d love to chat! 🪲👋